We’re continuing our conversation about scaling your mortgage business through referrals. If you missed last week’s post covering the three keys to blowing up your real estate referrals, go back and read that gem. To recap, you want to ditch the cheesy sales pitch, be genuine with social media engagement, and leverage the co-branding abilities of your digital mortgage tools.

In this week’s article, we’re going to uncover the reasons why, despite your efforts, you’re still not getting a steady stream of qualified leads from your real estate partners and clients. Before we start digging in, you must adopt the right mindset.

Remember that getting leads, from any source, requires sustained, nurturing effort.

Just like you can’t expect a prospective borrower to convert into a client after just one conversation or one email, you can’t expect referrals to pour in when you’re applying sporadic, half-assed effort. Resolve that referrals are a critical part of your lead generation and that you’ll work at them every day with the same drive as your other lead gen avenues.

Worst Mistakes When It Comes to Getting More Mortgage Referrals

Not Asking For Referrals / Lack of Promotion

Many mortgage professionals struggle to ask for referrals, often due to doubt. It could stem from low confidence in their competence or uncertainty about their value to the client. Fear of rejection or looking desperate is another possible reason some mortgage pros fail to ask for referrals.

One way to combat doubt or fear is to essentially “get out of your head.” Move the focus from your needs and wants to your prospect’s needs and wants.

Consider how much value you bring to others’ lives. Your professional financial advice, your well-intentioned guidance, and your help with the most significant financial decision most people will make in their lives are extraordinarily valuable.

And when you pair that expertise with empathy, your distinction truly becomes invaluable. So focus less on you, and more on them.

Not Communicating or Expressing Appreciation Enough

It’s essential to connect with your partners continuously. Not in a nagging way but in a “fanning the flame” way. Let your referral partners and former clients know how much you appreciate them. Send them valuable communication like:

- Thank you: Send them a card, email, or social media shout-out expressing your appreciation for them.

- Updates: Did you share an upcoming event in your last communication with them? Did they share something that they were anticipating? Send a quick update and ask for an update for some fresh, meaningful engagement.

- Follow-through: Did they ask you for additional information or a recommendation? Be sure to follow up with that info on time.

Subpar Service / Not Creating Super Fans

Think about this: which businesses do you recommend more? The ones where you had a good experience or those where you had a great one?

As tough as this may be to hear, most people aren’t thinking about your business as often as you are. They don’t wake up in the morning with the intention of sending you more referrals. They don’t think about how they can work it into a conversation.

When your clients refer your mortgage services, it’s because they had a positive experience. And if the experience was exceptionally delightful, clients will sing your praises over and over again.

That’s what we call a “superfan.”

Superfans are the gold standard of referral partners. They’re loyal, enthusiastic, and, while they don’t wake up every morning thinking about your business, they WILL make extra effort to reach more people on your behalf.

Haven’t Perfected The User Experience (UX)

Along the same thread, the best way to create a delightful experience is to make sure the user experience is on point. End-to-end, every touchpoint must remove as much friction as possible and elevate the experience at the same time.

If you are using any of our digital mortgage tools, you know that UX is foundational to our design. This isn’t just a marketing ploy. UX design influences behavior. Everything from where graphics are placed on the screen, to how options are displayed, to how prompts and maps motivate users to complete tasks has been scientifically studied and proven.

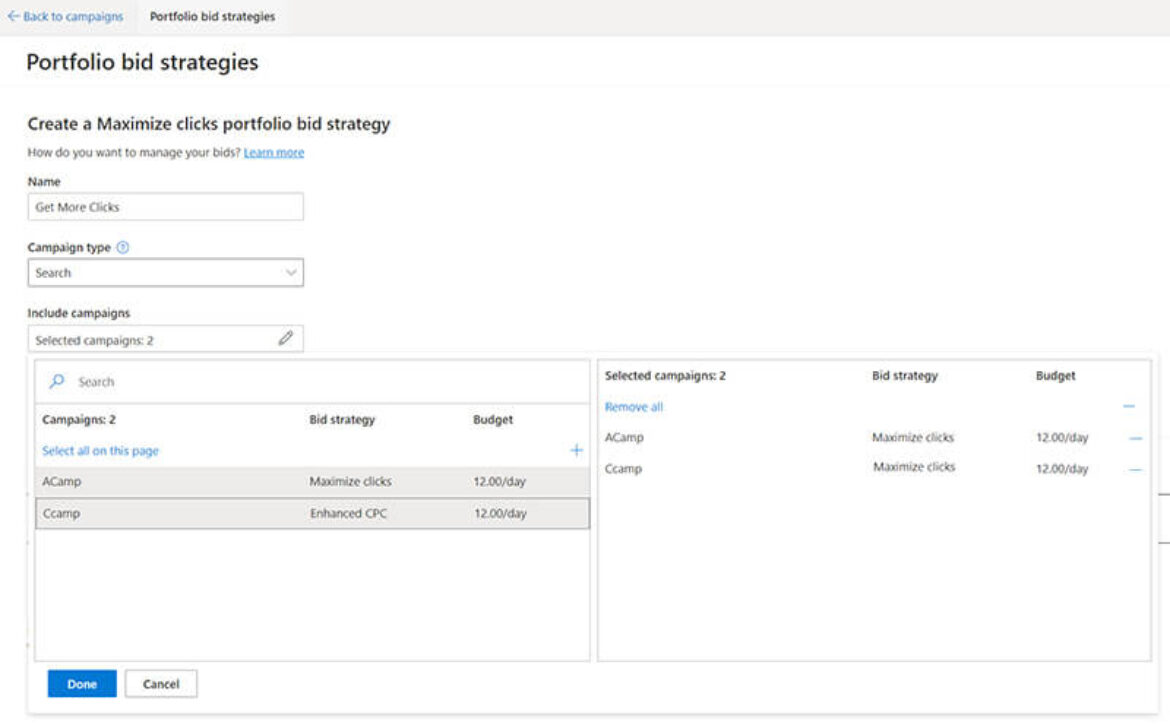

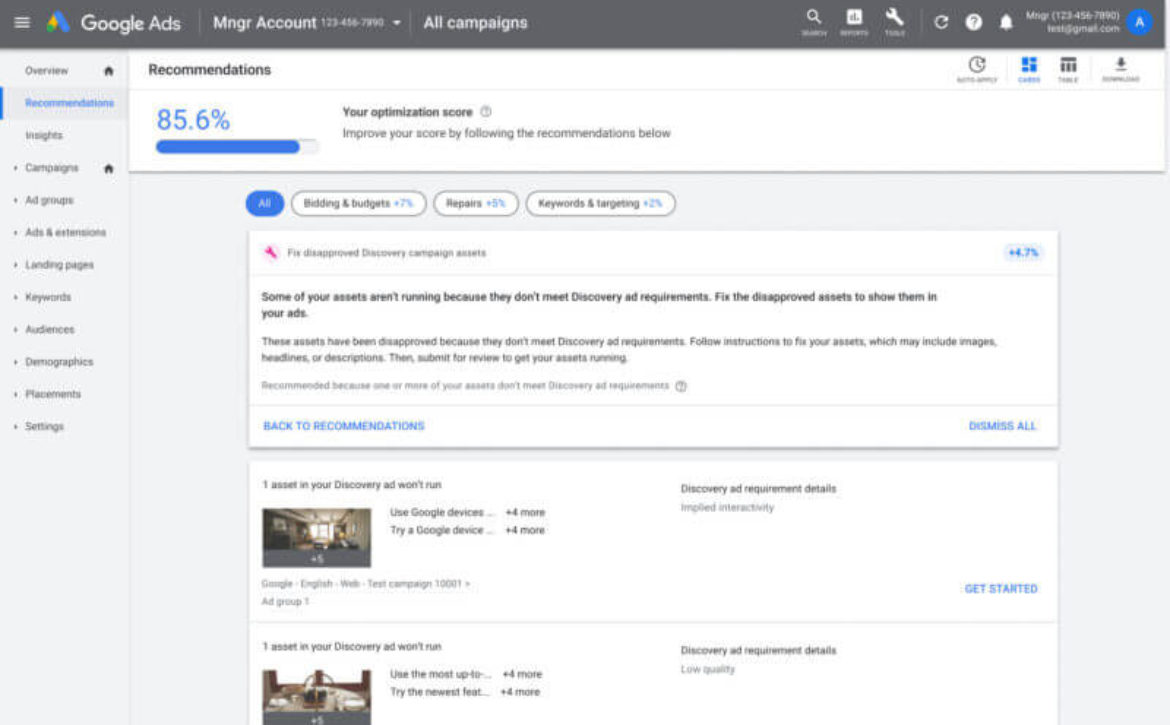

So if your goal is to create superfans (of course it is), then make sure you’re using mortgage software designed to create superfans. See the UX design of Loanzify POS:

Asking For The Wrong Referrals

It’s happened before. Probably too many times. You ask your client for a referral, they give you one, but nothing happens. Perhaps that person never returned your call or cut you off soon after the first communication. Or maybe they just weren’t the right fit. It could be that you asked for the wrong referrals.

This is often the case when you ask the wrong questions. Typically, a loan officer may ask, “Do you know anyone who could benefit from my services?” It seems like the right question to ask, but the reply is often not honest. Here’s why.

Asking for a referral when they may not be ready to provide one (they haven’t entirely decided) puts them on the spot to give you one anyway. Since they haven’t truly committed to the idea of referring you, they’ll name someone they feel safe with or hold in low regard.

They do this to protect their reputation. If you happen to falter, the poor experience won’t reflect on them. Instead, be more specific with your request.

“Do you know anyone who is looking to relocate?”

“Are there any veterans in your family? Are they homeowners?”

“Are your parents of retirement age? I’d love to share information on how they can use their equity to supplement their retirement.”

Need more ideas for getting mortgage referrals and building a mortgage business in the digital age? Subscribe to our email list below and never miss an article.