How to test your content site strategy for continued improvement

Assessing the effectiveness of your content strategy is an integral part of growing your organic visibility as well as furthering your skills as an SEO. Techniques like forecasting can help you estimate the value a new piece of content or site change may generate for your business. But if you don’t record the real-life performance of those changes and compare it with your forecasts, you may be overlooking takeaways that can be used to improve future initiatives.

At SMX Convert, Alexis Sanders, SEO director at Merkle, shared the tactics that she uses to analyze site strategies for continued improvement. While the techniques mostly pertain to content, they can also be applied to other aspects of a site, like user experience.

Potential roadblocks to experimenting and testing your strategy

Experimentation can help you figure out what works best for your brand and its audience, but only if you’re able to ensure the integrity of your experiments and overcome the challenges associated with them.

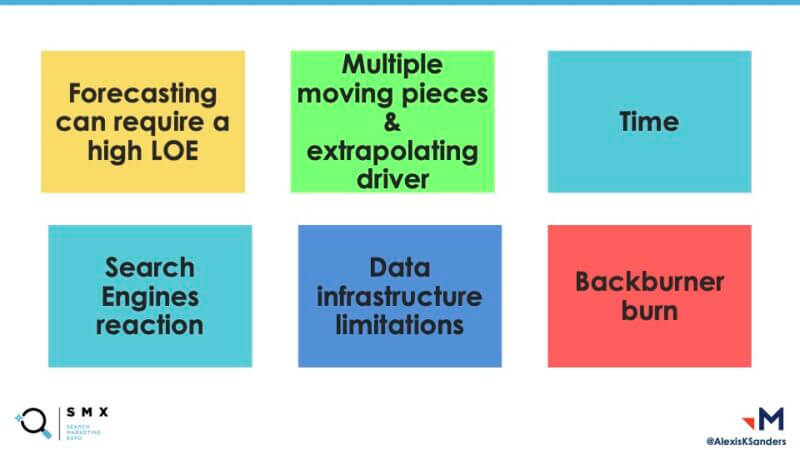

High level of effort. Forecasting can require a high level of effort, skill, comfort with analytics and time. You’ll also have to determine a model to work with and formulate an approach that ensures consistency. Fortunately, the effort involved may decrease over time: “You can become better at it, but it takes extra time and it takes time away from the action and so that’s one of the challenges that a lot of people have to face,” Sanders said.

Multiple moving pieces. Large sites also typically have many people working to implement different things across the site. An e-commerce site, for example, may have different teams dedicated to merchandising, inventory, updating pricing and development. “So, extrapolating a driver can be very challenging on a large site, and making sure to have that accurate record of what is actually going on is absolutely critical,” she said.

Time. In order to get an accurate depiction of how your changes are impacting your site or business, it’s crucial to record your findings over time. “How often are you going to be reporting on things? When are you ultimately going to see an effect?” Sanders asked, “One of the solutions that we’ve seen at Merkle is by recording at different times and reporting 15 days, 30 days, 60 days, 90 days after a particular event has occurred and making sure you maintain a record of that.”
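As a minimal sketch of that reporting cadence, the snippet below turns an event date into the 15-, 30-, 60- and 90-day checkpoints Sanders mentions. The function name `reporting_dates` and the launch date are hypothetical, chosen only for illustration.

```python
from datetime import date, timedelta

# Checkpoint offsets (in days) quoted in the article.
CHECKPOINT_DAYS = (15, 30, 60, 90)


def reporting_dates(event_date: date, offsets=CHECKPOINT_DAYS) -> dict:
    """Map each day offset to the calendar date on which a report is due."""
    return {days: event_date + timedelta(days=days) for days in offsets}


if __name__ == "__main__":
    launch = date(2022, 3, 1)  # hypothetical launch date, not from the article
    for days, due in reporting_dates(launch).items():
        print(f"{days:>2}-day report due {due.isoformat()}")
```

Storing these dates alongside the record of the change itself makes it harder for a checkpoint to slip by unreported.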

Search engines’ reactions. How search engines respond to your content may also present an unexpected challenge: “You may think your content is the best content in the world,” Sanders said, “It may satisfy your users, but search engines, it is their prerogative to determine what is the actual best result … and so there’s a certain level of lack of control that we have over that variable.”

Data infrastructure limitations. “If you’re interested in whether or not users are clicking a specific button or going through a specific path, and you’re not actually recording that information, then you’re not going to be able to report on it,” she said. For those facing these limitations, it’s necessary to set up the appropriate tracking and analytics moving forward so that you can eventually compare data over time.

Backburner burn. With the various action items that SEOs have to tend to on a regular basis, clearing some time in your schedule to report and communicate issues can be difficult. Nevertheless, it’s something that should be prioritized: “If it’s work that you’re doing, if it’s things that can make you more valuable within your organization, it’s something that should be prioritized for both your career and for future SEOs,” Sanders said.

Analyzing the effectiveness of new content

To determine how your audience will perceive your content, “[What] we’re ultimately going to have to rely on is forecasting, to start off with, because that’s going to be our hypothesis,” said Sanders. For this, she recommends relying on historical data, such as case studies and competitor insights, and findings from qualitative tests, which you can conduct on a sample of your target audience.

Case studies. Sanders recommends maintaining a record of your internal case studies and links to relevant articles about case studies for initiatives that you may want to attempt in the future. Accumulating all this information will help you get a better idea of how your proposed content may perform. “Potential KPIs that we’ll be looking at may include revenue, traffic, visibility metrics like impressions, rankings and user engagement metrics,” she added.

Competitive research. Competitors’ rankings, estimated traffic (which may be correlated to rankings) and search volume are going to be the most useful metrics for forecasting. Tools like Semrush, Ahrefs, BrightEdge, Conductor, AWR and Similarweb can provide you with insights on how your competitors are performing. “This can be really useful if you want to do a specific initiative that is sort of similar to what a competitor is doing,” Sanders said, “You can see how they’re currently performing and then guess whether or not you will outperform them in certain areas based on what you’re actually going to be doing and your level of authority within the space.”

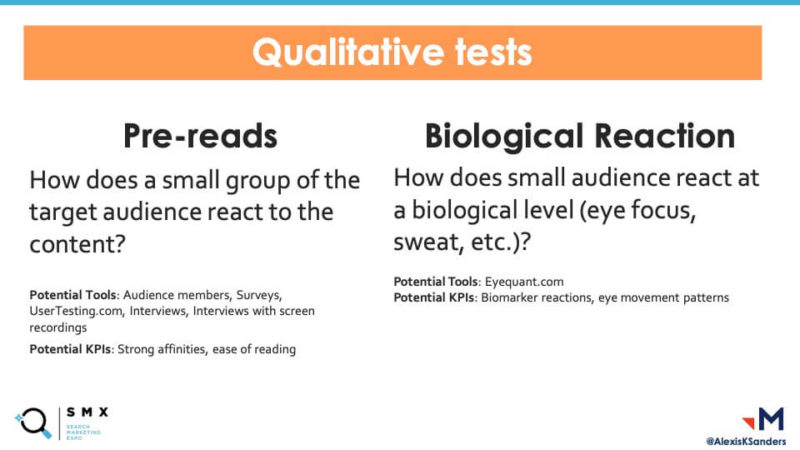

Pre-reads. A pre-read is when you ask a small group that represents your target audience to react to a piece of content. You can interview the group members for their opinions or provide them with surveys. Interviews with screen recordings “can be particularly useful if you’re looking at a site in general, or a functionality, but you can also use it for content,” Sanders said, adding, “What you’re looking for is: Do people have strong opinions about things? Do they have a strong affinity towards a piece of content? What is the ease of reading with this piece of content? Is it accessible to people? Those can be really useful for providing initial feedback and refining what you’re actually going to do with this new piece of content.”

Biological reactions. If your content deals with tough topics, observing your sample audience’s biological reactions, such as eye movement patterns and perspiration, can also be helpful for testing your content strategy. Tools like EyeQuant can help you document how users respond to your content.

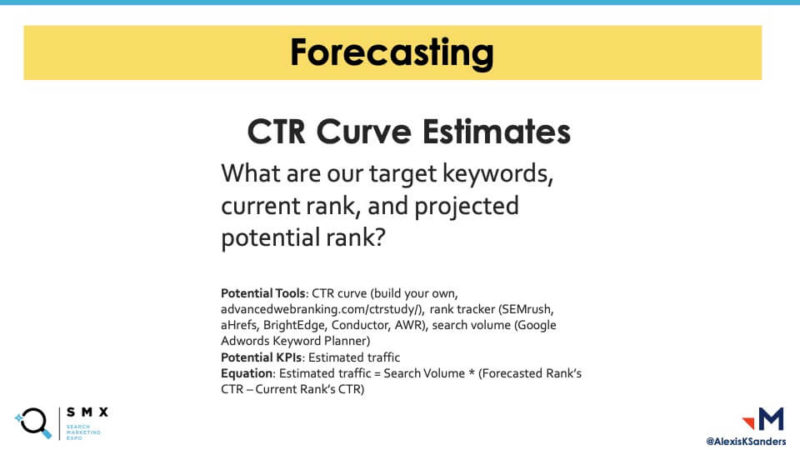

Forecasting. Clickthrough rate curve estimates can help you predict incremental traffic lift. “We [calculate] that using the search volume multiplied by the clickthrough rate of our forecasted rank subtracted by our current rank,” Sanders said.

Estimated traffic lift = Search Volume x (Forecasted Rank’s CTR – Current Rank’s CTR)

“This can be really useful if you’re trying to show potential for ranking for certain keywords or keyword sets, or if you’re looking at a large group of keywords, you can just say what would be our incremental value if we increase by one ranking on all these keywords,” she added.
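The formula above can be sketched in a few lines of Python. The CTR curve here is a made-up placeholder, not measured data; in practice you would substitute a curve derived from your own Search Console data or an industry study. The names `EXAMPLE_CTR_BY_RANK`, `estimated_traffic_lift` and `lift_if_all_move_up_one` are hypothetical.

```python
# Hypothetical CTR curve: ranking position -> clickthrough rate.
# Placeholder values for illustration only; replace with your own curve.
EXAMPLE_CTR_BY_RANK = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def estimated_traffic_lift(search_volume, current_rank, forecasted_rank,
                           ctr_by_rank=EXAMPLE_CTR_BY_RANK):
    """Search volume x (forecasted rank's CTR - current rank's CTR)."""
    # Ranks missing from the curve are treated as receiving no clicks.
    current_ctr = ctr_by_rank.get(current_rank, 0.0)
    forecasted_ctr = ctr_by_rank.get(forecasted_rank, 0.0)
    return search_volume * (forecasted_ctr - current_ctr)


def lift_if_all_move_up_one(keywords, ctr_by_rank=EXAMPLE_CTR_BY_RANK):
    """Total lift if every keyword gains one position.

    `keywords` is an iterable of (search_volume, current_rank) pairs.
    Keywords already at rank 1 stay at rank 1 and contribute no lift.
    """
    return sum(
        estimated_traffic_lift(volume, rank, max(rank - 1, 1), ctr_by_rank)
        for volume, rank in keywords
    )


if __name__ == "__main__":
    # Hypothetical keyword set: (monthly search volume, current rank).
    keyword_set = [(1000, 3), (500, 2), (2000, 1)]
    print(estimated_traffic_lift(1000, current_rank=3, forecasted_rank=1))
    print(lift_if_all_move_up_one(keyword_set))
```

The second function mirrors the "increase by one ranking on all these keywords" scenario Sanders describes; the result is only as reliable as the CTR curve fed into it.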

Quantifying the search value of content updates

Trends data. Sometimes it makes more sense to update existing content rather than start from scratch. In this scenario, trends data can also be used to predict performance (in addition to the above-mentioned clickthrough rate curve estimates).

To utilize historic trend data to create forecasts, “We would take a certain model — like Facebook Prophet, a machine learning model, whether it’s linear regression, a SARIMA model, a long/short-term memory recursive neural network machine model — and we would use that by feeding it time-series data of what we currently have of any metric (revenue, traffic, impressions, whatever you want to feed it) and that time series would project out into the future,” Sanders said. Those projections can be combined with findings from your internal case studies to give you an idea of how your content will perform.
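To make the idea concrete, here is a minimal sketch using the simplest of the models Sanders lists, a linear regression fitted to a time series and projected forward. It is written in plain Python rather than with Prophet or a SARIMA library, it ignores seasonality, and the monthly figures are invented for illustration. The function names are hypothetical.

```python
def fit_linear_trend(values):
    """Ordinary least squares fit of values against their index (0, 1, 2, ...).

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def project(values, periods_ahead):
    """Extend the fitted straight line `periods_ahead` steps past the data."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]


if __name__ == "__main__":
    # Hypothetical monthly organic sessions, oldest first.
    monthly_sessions = [100, 110, 120, 130]
    print(project(monthly_sessions, periods_ahead=2))
```

A straight line is a deliberately crude baseline. Metrics with strong seasonal swings would call for one of the seasonal models named in the quote instead.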

Split testing. In certain scenarios, such as testing UX changes, split testing can help you find out what works best for your users and increases conversions. “For SEO, generally, we don’t want to have duplicate content, so if you’re introducing more than one URL, what you’re going to want to do is ensure that the bots are getting the appropriate experience,” she said, adding, “Or just don’t change the URL at all; ultimately, preserving the URL is generally going to be the best course of action.”

If there is the potential for search engine bots to find one of your alternative experience URLs, it’s best to 302 redirect that URL to the main URL, or simply exclude the bot if the test only runs for a short period of time.

In terms of analysis, user engagement metrics can tell you if users are taking the actions you want them to and whether they’re doing so efficiently (compared to the control experience). Tools like Google Analytics can reveal how your new experience compares in terms of bounce rate, time on page and so on.
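One common way to check whether a difference between the control and the new experience is more than noise is a two-proportion z-test on conversion counts. This technique is not mentioned in the session; it is offered here as an assumption about how such a comparison could be done. The sketch uses only the standard library, and the visitor and conversion counts are invented.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and variant (b).

    Returns (z, p_value), where p_value is two-sided and based on the
    normal approximation with a pooled conversion rate.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation at all (e.g. zero conversions in both groups).
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 100/1000 conversions on control, 150/1000 on variant.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

The normal approximation is reasonable for large samples; with only a handful of conversions per group, an exact test would be a safer choice.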

Launch and learn. This approach is as simple as it sounds — just launch the proposed change and see what happens.

“A lot of times when you launch, you can basically look at any metric that you’re recording,” Sanders said, “Some of the core ones are going to be the user engagement metrics: How are users acting on your site? Are they completing actions that you want them to do, like clicking buttons, completing forms, are they staying on the site appreciably long?”

The KPIs to look at include revenue, conversions, traffic, visit sessions, organic clicks and impressions, ranking information and so on. Social media engagement can also tell you whether your audience is engaging with a piece of content.

Best practices for experimenting and testing your strategy

“The number one tip I have for you is to maintain an accurate record of events,” Sanders said, “Significant updates, good and bad, are absolutely critical to record — it is what allows us to have indifference towards the results and it’s something that also allows us to quantify risk later on.”

Likewise, it’s important to record site updates or anything else that happens on the site that may affect performance. Industry news and seasonality are also factors worth making note of, as well as search industry-related news, like core algorithm updates that may impact rankings.

As a final piece of advice, Sanders touched on the mindset necessary to conduct experiments that yield trustworthy results: “Be open to qualitative feedback and insights from your core audience members, they may tell you things that you didn’t even think about, which can be really useful for refining a new piece of content or modifying an existing one.”

“Results indifference is absolutely critical to refining your understanding,” she emphasized, “So, make sure you go into it with a lack of caring as to whether or not the content performs but more interest in learning what you can from that.”


The post How to test your content site strategy for continued improvement appeared first on Search Engine Land.