Our Guide to A/B Testing for Core Web Vitals explained a series of small steps with two services and a browser extension to write tests for frontend code tactics. Thirty years ago, we would copy a page’s raw source and run find-and-replace operations until we had a workable facsimile of the page in a web-enabled folder to demonstrate the same kinds of recommendations.

We don’t have to do that anymore.

Setting up a reverse proxy and writing software for conducting SEO twenty years ago was limited to a small set of companies that built and hosted the infrastructure themselves. Cloudflare now provides us with a turnkey solution. You can get up and running using a free account. To change frontend code, use Cloudflare’s HTMLRewriter() JavaScript API.

The code is relatively easy to comprehend.

With Core Web Vitals, it’s the immediacy, the perceived need and the rapidity of being able to cycle through varying tests that ultimately shows value and really impresses. The fundamental platform is available to you through the steps outlined in our guide. We’ll write functions for making commonplace changes so that you can begin testing real tactics straight away.

HTMLRewriter()

If you’ve been following along, you may know our script provides the option to preload an element that you can specify in a request parameter for LCP. We return a form when the value is missing, just to make it easy to add your reference. There is also a placeholder for something called importance, which we’ll be addressing as well. What’s important is to understand what we’re going to do.

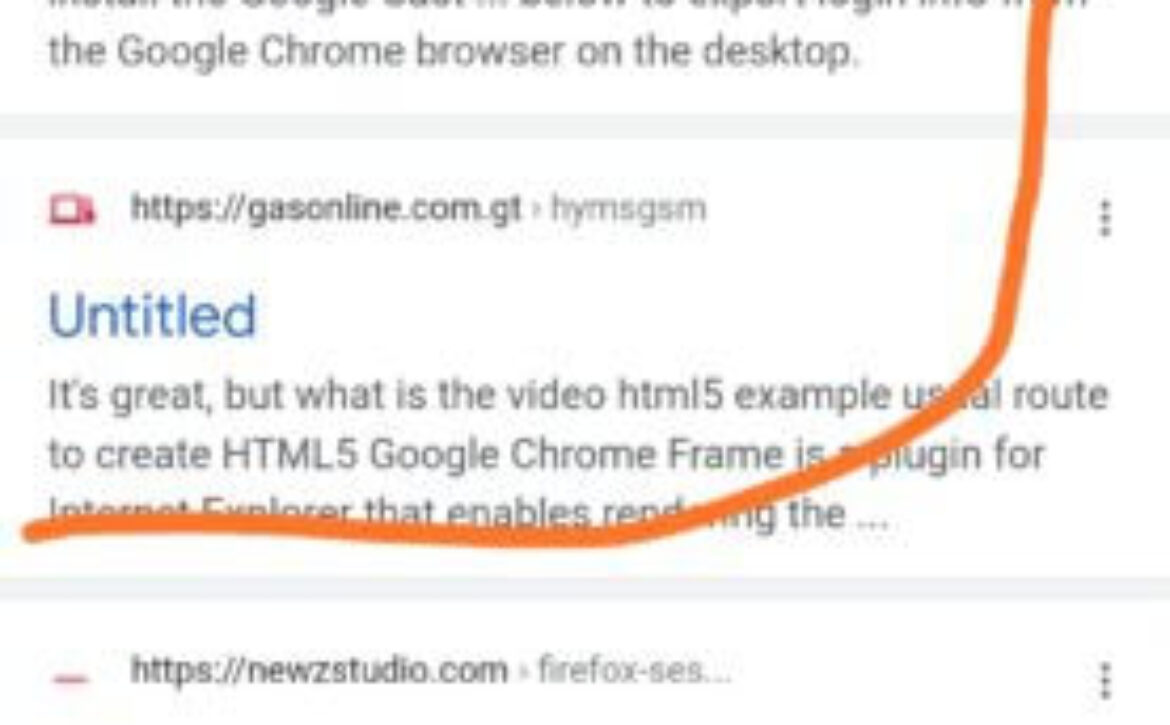
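As a rough sketch of the preload idea described above (not the script from our guide), a Worker could read a request parameter and add a preload link to the head. The parameter name `lcp`, the helper names and the `as="image"` value are all assumptions made for illustration.

```javascript
// Hypothetical helper: read the LCP resource URL from a request parameter.
// The parameter name "lcp" is an assumption made for this sketch.
const getLcpParam = (requestUrl) => new URL(requestUrl).searchParams.get('lcp');

// Escape characters that would break out of a double-quoted HTML attribute.
const escapeAttr = (value) =>
  value.replace(/&/g, '&amp;').replace(/"/g, '&quot;').replace(/</g, '&lt;');

// Build the preload tag as a string.
// as="image" assumes the LCP element is an image.
const buildPreloadTag = (href) =>
  `<link rel="preload" as="image" href="${escapeAttr(href)}">`;

// Element handler meant to be attached to the head element.
const preloadLCP = (href) => ({
  element: (el) => {
    // { html: true } inserts the string as markup instead of escaped text.
    el.append(buildPreloadTag(href), { html: true });
  },
});
```

Inside a Worker, this could be attached with something like `.on('head', preloadLCP(href))` whenever `getLcpParam(request.url)` returns a value, falling back to the form when it returns null.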

The HTMLRewriter() API gives us the ability to use jQuery-style element selectors to attach to HTML elements in raw page source to run JavaScript from that foothold. You’ll be able to modify elements, a whole group of elements or even the base document in powerful ways. You can edit a page’s title, for example. In production, your edit becomes the title and is what gets indexed at Google and Bing.
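To make the title example concrete, here is a minimal sketch of an element handler that replaces a title’s text, with one possible way to wire it into a Worker. The function name `setTitle`, the `worker` object and the title text are placeholders of ours, and the wiring assumes the module Worker format rather than whatever your existing script uses.

```javascript
// Element handler factory: replace the text inside a matched element.
// setInnerContent() treats the string as text unless { html: true } is passed.
const setTitle = (text) => ({
  element: (el) => {
    el.setInnerContent(text);
  },
});

// Sketch of a module Worker. HTMLRewriter and fetch are globals in the
// Workers runtime; the title text is a placeholder for illustration.
const worker = {
  async fetch(request) {
    const response = await fetch(request);
    return new HTMLRewriter()
      .on('title', setTitle('An Edited Title for Testing'))
      .transform(response);
  },
};
// In a deployed module Worker you would finish with: export default worker;
```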

One complication you will encounter is that you can only edit raw source, not a hydrated Document Object Model (DOM). One quick way to view raw source is with the browser’s built-in view-source functionality. With Firefox, view-source highlights validation errors in red, for example. Even when browsers silently “fix” broken HTML as they render, the underlying markup error can usually be corrected with our Worker.

Working inside DevTools, the “Sources” tab provides access to raw source. Use preference settings to always “pretty print” source, which will format it so you can scan the code to look for optimizations. Another preference tip is a setting to bypass cache when DevTools is open. This workflow will help you as you go so your optimizations don’t result in reference errors.

Element Selectors

When you spot something you want to fix with HTMLRewriter(), you’re going to need to narrow changes and isolate the element to avoid altering more code than you intend. Use the most exclusive selector possible, which can be very easy when elements have unique IDs. Otherwise, find a tell-tale sign, such as a reference to a unique location in href or src attributes.

You will find the ability to match attribute values with wildcards using operators that will feel familiar from vim “command mode” regular expressions: ^= anchors a match to the start of a value, $= anchors it to the end, and *= matches anywhere in the value. These are CSS attribute selectors rather than full regular expressions. You can also supply more than one criterion, even with the same attribute name. Use your vim powers to narrow matches to single elements, or match a group of elements with broader expressions. Logic can then separate concerns between changes.

Example using the wildcard “fonts.g” to match dns-prefetch link elements and remove those for fonts.googleapis.com.

.on(`link[rel="dns-prefetch"][href*="fonts.g"]`, removeEl())

Example showing two matches for the href attribute, narrowing it to a single file among many.

.on('link[href^="https://example.com/static/version"][href$="/print.css"]', unblockCSS())

The first example above uses that wildcard match where the string “fonts.g” can appear anywhere in the href attribute of link elements. It’s an example for a broad match that might attach to more than one link element for an appropriate action, like removing the element(s) that match, if any.

The second example from above shows how you can select a particular link element whose href starts with one string and ends with another, but which can have anything between. This is useful for selecting a single element that is part of a build system whereby there may be a dynamically named versioning token directory for browser cache-busting.
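If the matching rules are easier to reason about as plain string tests, the sketch below mirrors the three attribute operators with ordinary JavaScript string methods. It only illustrates the semantics; HTMLRewriter does the real matching, and the helper names and the versioned path are hypothetical.

```javascript
// Plain-string equivalents of the three attribute operators used above.
const matches = {
  '*=': (value, s) => value.includes(s),   // substring anywhere
  '^=': (value, s) => value.startsWith(s), // prefix
  '$=': (value, s) => value.endsWith(s),   // suffix
};

// Every condition must hold, mirroring stacked [attr...] selectors.
const hrefMatches = (href, conditions) =>
  conditions.every(([op, s]) => matches[op](href, s));

// Hypothetical build path with a dynamically named version directory.
const printCss = 'https://example.com/static/version-8f3a2c/print.css';
const mainCss = 'https://example.com/static/version-8f3a2c/main.css';
const conditions = [
  ['^=', 'https://example.com/static/version'],
  ['$=', '/print.css'],
];

console.log(hrefMatches(printCss, conditions)); // true
console.log(hrefMatches(mainCss, conditions));  // false
```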

Link elements

Link elements are multifaceted by virtue of their several attributes. Thus, they can serve a number of purposes. Not to be confused with links (as in anchors), link elements are typically where you start looking for quick-hitting performance strategies. Some preload and preconnect link elements may be actually getting in the way or maybe entirely unnecessary.

You only get a maximum of six hosts to connect to simultaneously. Your first strategy will be to make the most of them. Try removing all resource hint link elements (preload, preconnect, dns-prefetch) and test the result. If timings go the wrong way, then add them back one at a time and test the real impact of each. You’re going to need to learn how to read the WebPageTest waterfall chart in depth.
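One way to run that remove-everything-then-add-back experiment is a single handler with a keep list. This is a sketch under our own assumptions: the name `removeHintsExcept`, the set of rel values and the idea of matching on the exact href are illustrative, and it treats rel as a single token.

```javascript
// rel values this sketch treats as removable hints.
const HINT_RELS = new Set(['preload', 'preconnect', 'dns-prefetch', 'prefetch']);

// Remove hint link elements unless their href is in the keep list.
// Attach it with a broad selector such as 'link[rel]'.
const removeHintsExcept = (keepHrefs = []) => ({
  element: (el) => {
    const rel = (el.getAttribute('rel') || '').toLowerCase();
    if (!HINT_RELS.has(rel)) return; // leave stylesheets, icons, etc. alone
    if (keepHrefs.includes(el.getAttribute('href'))) return; // hint under test
    el.remove();
  },
});
```

Start with `removeHintsExcept()` to strip every hint, then pass one href at a time in the keep list and compare waterfalls between runs.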

Following this, tactics go to resource loading, which also involves link elements pretty heavily, but not exclusively. At this point, we want to look at scripts as well. The order in which resources load can affect things very negatively. Our testbed is perfect for trying various tactics gleaned from reading the waterfall chart. Keep the console drawer of DevTools open to check for errors as you work.

Removing elements

Removing elements is exceptionally simple to do. Once you’ve selected an element, or a group of them, the next field in HTMLRewriter().on() statements is where you write a script block. You can do this in place with curly braces. You can reference a named function. Or you can build a new class instance for an object defined earlier, which in this context, may be over-engineering.

When you encounter sample Worker code you may see class initializers. All that’s really needed to remove an element is the following function. Anything done with a named class object can be done with a plain function (object) using less code, which means fewer bugs, more readable syntax and code that is far easier to teach. We’ll revisit class constructors when we delve into Durable Objects.

element: (el) => { el.remove(); }

In a nutshell, this block defines a variable “el” in reference to the element instance and the code block calls the built-in remove() element method, which you will find detailed in the corresponding documentation. All HTMLRewriter() element methods are available to you for use with instances of your element matches. Removing elements is one of the simpler ones to comprehend.
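For comparison, here is a sketch of the three ways of supplying a handler mentioned above, each doing the same removal. `removeEl` is the name used in the selector example earlier; `inlineHandler` and `ElementRemover` are names we made up for illustration.

```javascript
// 1. Object literal written in place with curly braces.
const inlineHandler = { element: (el) => { el.remove(); } };

// 2. Named function that returns the handler object.
const removeEl = () => ({
  element: (el) => { el.remove(); },
});

// 3. Class with an element() method; equivalent, but more code.
class ElementRemover {
  element(el) { el.remove(); }
}

// Any of these can be passed as the second argument to .on(), for example:
//   .on('link[rel="dns-prefetch"]', inlineHandler)
//   .on('link[rel="dns-prefetch"]', removeEl())
//   .on('link[rel="dns-prefetch"]', new ElementRemover())
```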

Unblocking render blocking resources

Unblocking script elements is much easier than unblocking stylesheet resources. As luck would have it, we have boolean attributes (async and defer) for signaling the browser that we want to asynchronously load a script or defer running it until the document has been parsed. That’s ideal! Stylesheets, on the other hand, need a little “hack” to get them unblocked: they require some inline JavaScript.

Essentially, we turn a stylesheet link element reference into preload to unblock it. But that changes the nature of the link element to one where the style rules will not get applied. Preload downloads resources to store them in local cache, ready for when needed, but that’s it. DevTools warns you when a resource is preloaded and not used expediently — that’s when you know you can remove it!

Preloading and then using an onload attribute to run JavaScript to change it back from preload to stylesheet is the CSS “hack” to unblock what otherwise is a naturally render blocking resource. Using JavaScript’s this keyword allows you to change the element’s properties, including the rel attribute (and the onload attribute itself). The pattern has a noscript fallback for non-JavaScript sessions, as well.

Here is our unblockCSS() function which implements the strategy using ready-made element methods.

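const unblockCSS = () => ({
  element: (el) => {
    // Drop any media attribute; the onload script sets media to 'all' later.
    el.removeAttribute('media');
    // Turn the stylesheet reference into a non-blocking preload.
    el.setAttribute('rel', 'preload');
    el.setAttribute('as', 'style');
    // Once downloaded, switch back to a stylesheet so the rules apply.
    el.setAttribute('onload', "this.onload=null;this.rel='stylesheet';this.media='all'");
    // Fallback for sessions without JavaScript.
    el.after(`<noscript><link rel="stylesheet" href="${el.getAttribute("href")}"></noscript>`, { html: true });
  }
});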

Select the link element stylesheet references that are render blocking and call this function on them. It allows the browser to begin downloading the stylesheet by preloading it. Once loaded, the rel attribute switches back to stylesheet and the CSS rules get immediately applied. If style problems occur after this change, then one or more sheets need to load in normal request order.

The function acts as a reusable code block. Toggle your element selections using HTMLRewriter() and test the difference unblocking CSS sheets one at a time, or in groups, depending on your approach. Utilize the tactic to achieve an overall strategy unblocking as much as you can. However, always remember to look for problems resulting from changes to CSS and Script resources.
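One possible way to toggle selections between test runs is to keep them in a list with an on/off flag and register only the enabled ones. This is a sketch, not part of the script from our guide: `applyTactics`, the selectors and the empty stand-in handlers are hypothetical.

```javascript
// Register only the enabled tactics on a rewriter-like object.
// Works with anything exposing a chainable on(selector, handler) method.
const applyTactics = (rewriter, tactics) =>
  tactics
    .filter((t) => t.enabled)
    .reduce((rw, t) => rw.on(t.selector, t.handler), rewriter);

// Example toggle list. Selectors are placeholders; the empty handlers stand
// in for functions such as unblockCSS() defined elsewhere in the Worker.
const exampleTactics = [
  { enabled: true, selector: 'link[href$="/print.css"]', handler: { element() {} } },
  { enabled: false, selector: 'link[href$="/theme.css"]', handler: { element() {} } },
];
```

In a Worker this might read `applyTactics(new HTMLRewriter(), exampleTactics).transform(response)`, flipping one flag at a time between test runs.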

Script priorities

The order in which you load styles can botch the design. Unexpectedly fast-loading stylesheet rules will overwrite ones more sluggishly loaded. You also have to watch while loading scripts in alternate order so that they get evaluated and are resident in memory when the document is evaluated. Reference errors can cascade to dozens or hundreds of script errors.

The best way to check for problems is to watch the console drawer and simulate slow network connections. This can exaggerate problems to the point they should be evident in DevTools. If script resources are processed using more powerful CPUs and load over cable modem speed, or faster, it is possible you’ll miss a critical error. Requests get nicely spaced out, as well.

Here are our functions for changing, or adding, async and defer attributes.

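// Load the script asynchronously; clear defer so only one mode is set.
const makeAsyncJS = () => ({
  element: (el) => {
    el.removeAttribute("defer");
    el.setAttribute("async", "async");
  }
});

// Defer the script; clear async so only one mode is set.
const makeDeferJS = () => ({
  element: (el) => {
    el.removeAttribute("async");
    el.setAttribute("defer", "defer");
  }
});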

If a script doesn’t originally have async or defer, it’s harmless to run the removeAttribute() element method for a more reusable code block. You can safely disregard this if you’re working quickly on a one-off project where you might be writing this inline rather than calling a function you defined previously in the script.
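One caveat worth guarding against: async and defer only apply to external scripts, so a broad `script` selector would also touch inline blocks to no effect. The variation below is a sketch that skips elements without a src attribute; the name `makeDeferExternalJS` is ours, and using a `script[src]` selector with the original function would be another way to get the same result.

```javascript
// Defer only external scripts; inline scripts (no src) are left untouched
// because async and defer do not apply to them.
const makeDeferExternalJS = () => ({
  element: (el) => {
    if (!el.hasAttribute('src')) return;
    el.removeAttribute('async');
    el.setAttribute('defer', 'defer');
  },
});
```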

Alt attributes for SEO

As mentioned, our Guide to A/B Core Web Vitals tactics was, by design, meant for us to have a fully functioning Edge Computing testbed up and running to demonstrate content with future SEO for Developers articles and future events. During our SMX West event last year (2021), we demonstrated using Cloudflare Workers for a website, achieving Lighthouse fireworks (scoring 100 across all its tests).

There are lots of things which need to be in place to get the fireworks. One important aspect is that all images must have valid alt attributes. The test can detect when the text in alt attributes is “nondescript,” or when the attribute is present but empty. You need words that depict what’s in the associated image. One way to do that might be to parse the file name from the src attribute.

Here is a function that extracts text from img src attributes to power alt text from filenames with hyphens.

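const imgAltFromSrc = () => ({
  element: (element) => {
    const img_alt = element.getAttribute('alt');
    const img_src = element.getAttribute('src');
    // Only act when alt is missing or empty and there is a src to read.
    if (!img_alt && img_src) {
      // The /g flag replaces every hyphen, not just the first one.
      element.setAttribute('alt', img_src.replace(/-/g, ' '));
    }
  }
});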

In a nutshell, this will look for the condition on images where there is no alt attribute value. When there’s a likelihood its src attribute filename is hyphenated, it will replace hyphens with spaces to formulate what may be a suitable value. This version won’t work for the majority of cases. It doesn’t replace forward slashes or the protocol and domain. This merely serves as a starting point.
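As one possible next step from that starting point, the sketch below strips the protocol, domain, path, query string and file extension before swapping separators for spaces. The helper names `altFromSrc` and `setAltFromSrc` are hypothetical, and the result is only as descriptive as the file name itself.

```javascript
// Derive candidate alt text from an image URL: keep only the file name,
// drop the extension, and turn hyphens and underscores into spaces.
const altFromSrc = (src) => {
  const path = src.split(/[?#]/)[0]; // drop query string and fragment
  const file = path.substring(path.lastIndexOf('/') + 1); // last path segment
  const name = file.replace(/\.[a-z0-9]+$/i, ''); // drop the file extension
  let decoded = name;
  try {
    decoded = decodeURIComponent(name); // e.g. %20 becomes a space
  } catch (e) {
    // Malformed escape sequence: keep the raw name.
  }
  return decoded.replace(/[-_]+/g, ' ').trim();
};

// Element handler: only fills in alt when it is missing or empty.
const setAltFromSrc = () => ({
  element: (el) => {
    const src = el.getAttribute('src');
    if (el.getAttribute('alt') || !src) return;
    const alt = altFromSrc(src);
    if (alt) el.setAttribute('alt', alt);
  },
});

console.log(altFromSrc('https://example.com/images/red-running-shoes.jpg'));
// red running shoes
```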

Why we care

Having a testbed for trying out various Core Web Vitals Performance Optimization tactics is incredibly impressive to site owners. You should have this capability in your agency arsenal. A slight Google rankings boost with good scores is both measurable and largely achievable for most sites through tactics we will discuss and demonstrate. Tune in for a live performance March 8-9th.

SEO technicians have long recommended performance improvements for search engine ranking. The benefit to rankings has never been clearer. Google literally defined the metrics and publishes about their effect. We have Cloudflare Workers to implement Edge SEO remedies, as demonstrated here with alt attributes for images. Our reverse proxy testbed by virtue of Cloudflare sets the stage for rich communication with developers.

The post Functions for Core Web Vitals Tactics with Cloudflare’s HTMLRewriter appeared first on Search Engine Land.