Google December 2021 product reviews update is finished rolling out

Google has confirmed that the December 2021 product reviews update has finished rolling out, a few days before Christmas.

The announcement. “The Google product review update is fully rolled out. Thank you!” Google’s Alan Kent wrote on Twitter.

December 2021 product reviews update. As a reminder, the December 2021 product reviews update started rolling out at about 12:30 p.m. ET on December 1, 2021, and finished on December 21, 2021, 20 days after it was announced.

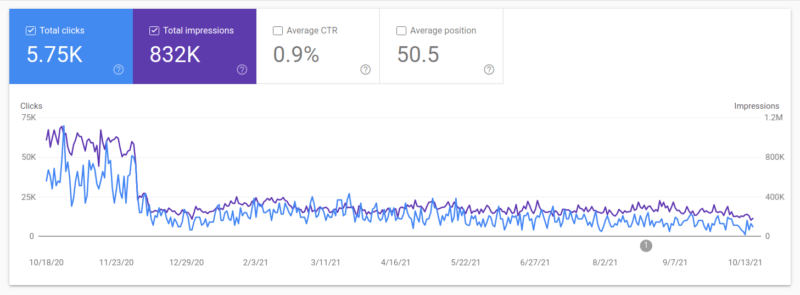

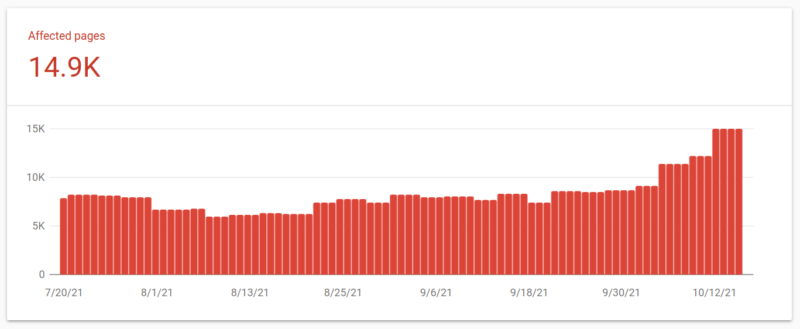

When and what was felt. Based on early data, this was not a small update. It was bigger than the April 2021 product reviews update, and it remained volatile throughout the rollout. Community chatter and tracking tools stayed at consistently high levels over the past few weeks.

Why we care. If your website offers product review content, you will want to check your rankings to see if you were impacted. Did your Google organic traffic improve, decline or stay the same?
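One rough way to run that check is to compare average daily organic clicks before the rollout began with the average after it finished. The sketch below is only an illustration: the `percent_change` helper and the sample numbers are hypothetical, and it assumes you have already exported daily click counts from your analytics tool as (date, clicks) pairs. Keep in mind that holiday seasonality can move traffic too, so a change is not necessarily caused by the update.

```python
from datetime import date
from statistics import mean

# Rollout window reported for the December 2021 product reviews update.
ROLLOUT_START = date(2021, 12, 1)
ROLLOUT_END = date(2021, 12, 21)


def percent_change(daily_clicks, start=ROLLOUT_START, end=ROLLOUT_END):
    """Percent change in average daily clicks, after the rollout vs. before it.

    daily_clicks is a list of (date, clicks) pairs. Days inside the rollout
    window are ignored because rankings were still shifting then.
    """
    before = [clicks for day, clicks in daily_clicks if day < start]
    after = [clicks for day, clicks in daily_clicks if day > end]
    if not before or not after:
        raise ValueError("need data on both sides of the rollout window")
    baseline = mean(before)
    if baseline == 0:
        raise ValueError("baseline traffic is zero, percent change is undefined")
    return (mean(after) - baseline) / baseline * 100


# Hypothetical sample data, not real traffic figures.
sample = [
    (date(2021, 11, 29), 1000),
    (date(2021, 11, 30), 1000),
    (date(2021, 12, 10), 700),  # inside the rollout window, ignored
    (date(2021, 12, 22), 800),
    (date(2021, 12, 23), 800),
]
change = percent_change(sample)
print(f"Average daily clicks changed by {change:.1f}%")
```

A negative number suggests traffic fell after the rollout and a positive number suggests it rose. Limiting the input to your product review pages makes the comparison more meaningful, since the update targets that type of content.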

Long term, you will want to put a lot more detail and effort into your product review content so that it is unique and stands out from the competition on the web.

More on the December 2021 product reviews update

The SEO community. The December 2021 product reviews update, like I said above, was likely felt more than the April version. I was able to cover the community reaction in one blog post on the Search Engine Roundtable. It includes some of the early chatter, ranking charts and social shares from some SEOs. In short, if your site was hit by this update, you probably felt it in a very big way.

What to do if you are hit. Google has given advice on what to consider if you are negatively impacted by this product reviews update. We posted that advice in our original story over here. In addition, Google provided two new best practices around this update: provide more multimedia around your product reviews, and provide links to multiple sellers, not just one. Google posted these two items:

- Provide evidence such as visuals, audio, or other links of your own experience with the product, to support your expertise and reinforce the authenticity of your review.

- Include links to multiple sellers to give the reader the option to purchase from their merchant of choice.

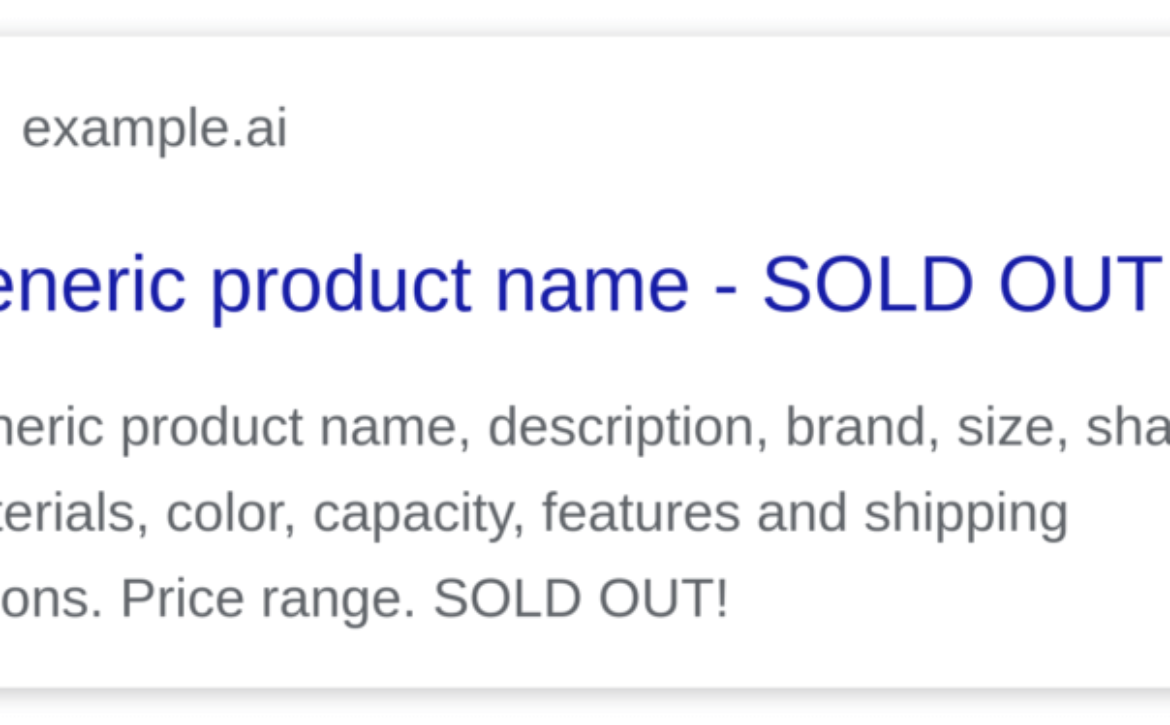

Google product reviews update. The Google product reviews update aims to promote review content that is above and beyond much of the templated information you see on the web. Google said it will promote these types of product reviews in its search results rankings.

Google is not directly punishing lower quality product reviews that have “thin content that simply summarizes a bunch of products.” However, if you provide such content and find your rankings demoted because other content is promoted above yours, it will definitely feel like a penalty. Technically, according to Google, this is not a penalty against your content; Google is just rewarding sites that have more insightful review content with rankings above yours.

Technically, this update should only impact product review content and not other types of content.

More on Google updates

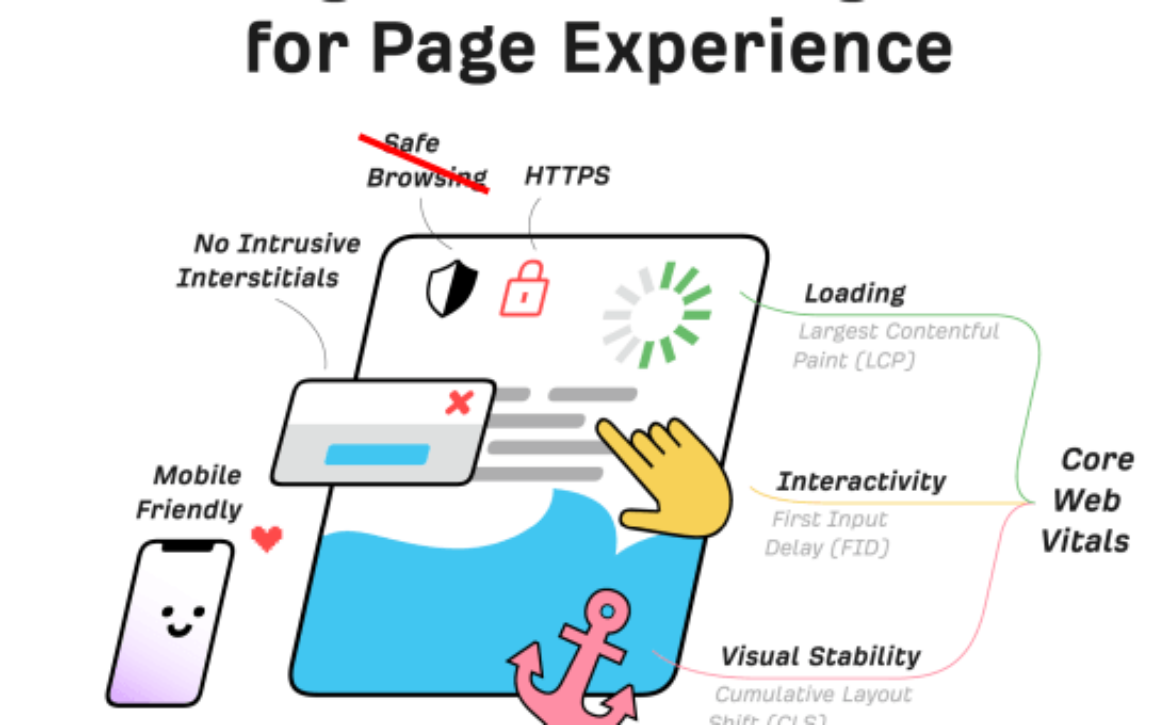

Other Google updates this year. This year we had a number of confirmed updates from Google and many that were not confirmed. The confirmed updates included the July 2021 core update; Google MUM, which rolled out in June for COVID vaccine names and was lightly expanded for some features in September (MUM is unrelated to core updates); the June 28 spam update; the June 23 spam update; the Google page experience update; the Google predator algorithm update; the June 2021 core update; the July link spam update; and the November spam update.

Previous core updates. The most recent core update was the November 2021 core update, which rolled out hard and fast and finished on November 30, 2021. Before that came the July 2021 core update, which was quick to roll out, and the June 2021 core update, which was slow to roll out but a big one. Before those was the December 2020 core update, which was very big, bigger than the May 2020 core update. The May update was also big and broad and took a couple of weeks to fully roll out. Before that was the January 2020 core update; we had some analysis on that update over here. The one prior to that was the September 2019 core update, which felt weaker to many SEOs and webmasters, as many said it didn’t have as big an impact as previous core updates. Google also released an update in November 2019, but that one was specific to local rankings. You can read more about past Google updates over here.

The post Google December 2021 product reviews update is finished rolling out appeared first on Search Engine Land.