We’re turning off AMP pages at Search Engine Land

“Gasp! Think of the traffic!”

That’s a pretty accurate account of the more than two dozen conversations we’ve had about Search Engine Land’s support of Google’s Accelerated Mobile Pages in the past few years. At first, it was about the headache of managing the separate codebase AMP requires, as well as the havoc AMP wreaks on analytics when a nice chunk of your audience’s time is spent on an external server not connected to your own site. But Google’s decision to no longer require AMP for inclusion in the Top Stories carousels gave us a new reason to question the wisdom of supporting AMP.

So, this Friday, we’re turning it off.

How we got here

Even when Google was sending big traffic to AMP articles that ranked in Top Stories, the tradeoff had its kinks. For a small publisher with limited resources, the development work is considerable. And not being able to fully understand how users migrated between AMP and non-AMP pages meant our picture of returning and highly engaged visitors was flawed.

But, this August we saw a significant drop in traffic to AMP pages, suggesting that the inclusion of non-AMP pages from competing sources in Top Stories was taking a toll.

Our own analytics showed that between July and August we saw a 34% drop in AMP traffic, setting a new baseline of traffic that was consistent month-to-month through the fall.

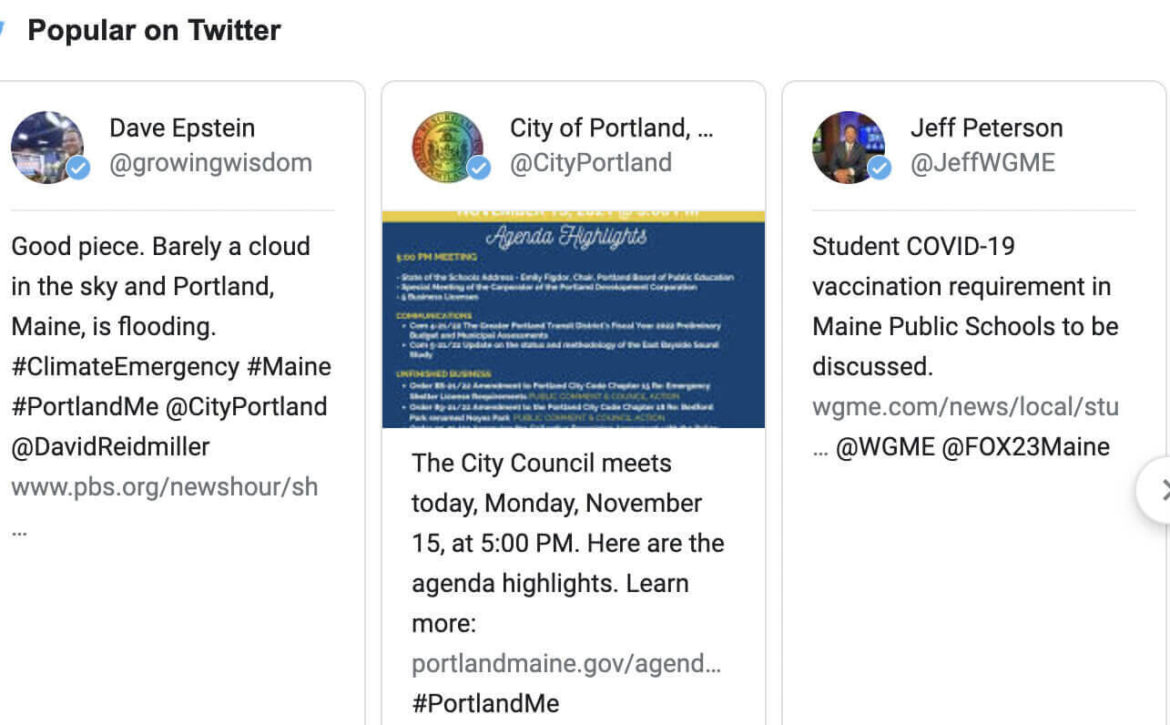

This week we also learned that Twitter stopped referring mobile users to AMP versions, which zeroed out our third-largest referrer to AMP pages behind Google and LinkedIn. We’ve seen LinkedIn referrals fall as well, suggesting that when November ends, we’ll be faced with another, lower baseline of traffic to AMP pages.

Publishers have been reluctant to remove AMP because of the unknown effect it may have on traffic. But what our data seemed to tell us was that there was just as much risk on the other side. We could keep AMP pages, which we know offer a good page experience by Google’s standards, and their visibility would fall anyway due to competition in Top Stories and waning support by social media platforms.

We know what a road to oblivion looks like, and our data suggests AMP visibility is on that path. Rather than ride that to nowhere, we decided to turn off AMP and take back control of our data.

How we are doing it

“If you are ready and you have good performance of your mobile pages, I think you should start testing.” That’s what Conde Nast Global VP of Audience Development Strategy John Shehata told attendees at SMX Next this month when asked about removing AMP.

Shehata suggested a metered strategy that starts with removing AMP on articles after seven days and then moves on to removing AMP on larger topical collections.

“If all goes well, then go for the whole site,” he said. “I think it’s gonna be better in the long run.”

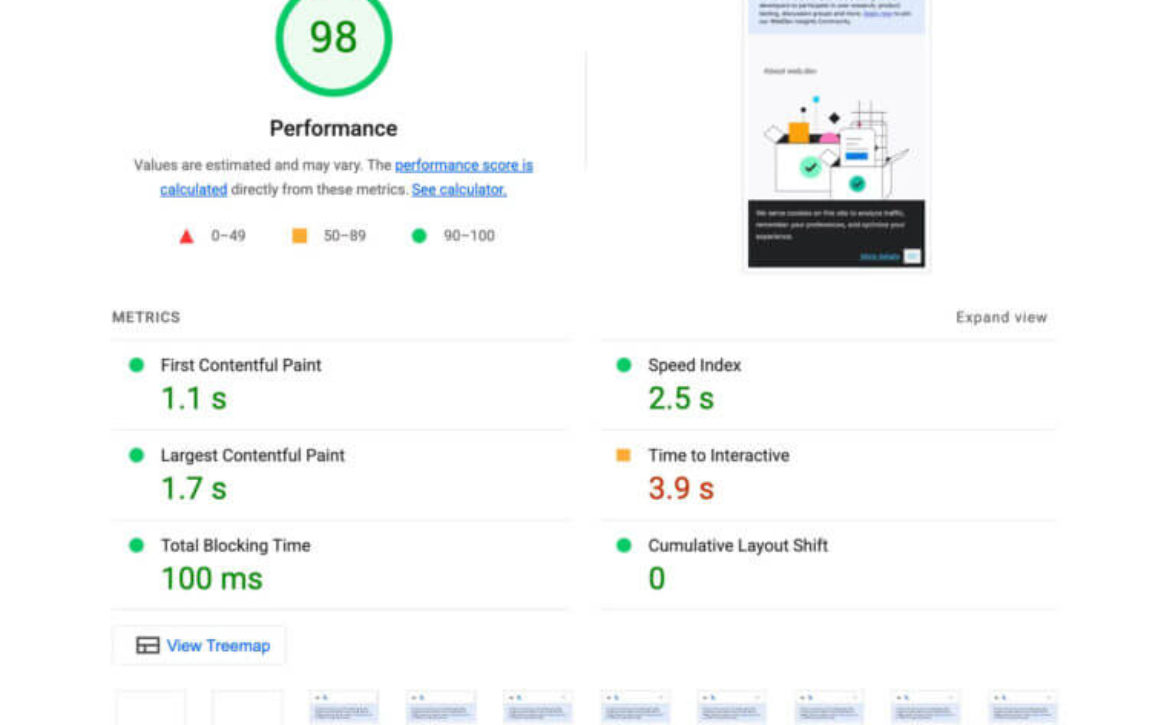

That, of course, hinges on the speed and experience of your native mobile pages, he said.
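A metered rollout like the one Shehata describes could be sketched roughly as below. This is only an illustration: the function name `should_serve_amp`, the `max_age_days` parameter, and the reading of "after seven days" as "seven days after publication" are our assumptions, not something he specified.

```python
from datetime import datetime, timedelta, timezone


def should_serve_amp(published_at, now, max_age_days=7):
    """Return True while an article is still inside the AMP window.

    Hypothetical helper: assumes "removing AMP after seven days" means
    an article keeps its AMP version until it is max_age_days old.
    """
    return now - published_at < timedelta(days=max_age_days)


if __name__ == "__main__":
    now = datetime(2021, 11, 19, tzinfo=timezone.utc)
    fresh = datetime(2021, 11, 17, tzinfo=timezone.utc)
    old = datetime(2021, 11, 1, tzinfo=timezone.utc)
    print(should_serve_amp(fresh, now))  # still in the window
    print(should_serve_amp(old, now))    # past the window
```

Widening the test to topical collections, and then the whole site, would amount to changing which articles this check applies to.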

The Washington Post, which is still listed as an AMP success story on the AMP Project site, actually turned off AMP a while back, said Shani George, VP of Communications at the Post.

“Creating a reading experience centered around speed and quality has long been a top priority for us,” she added, pointing us to an extensive write-up its engineering team published this summer around its work on Core Web Vitals.

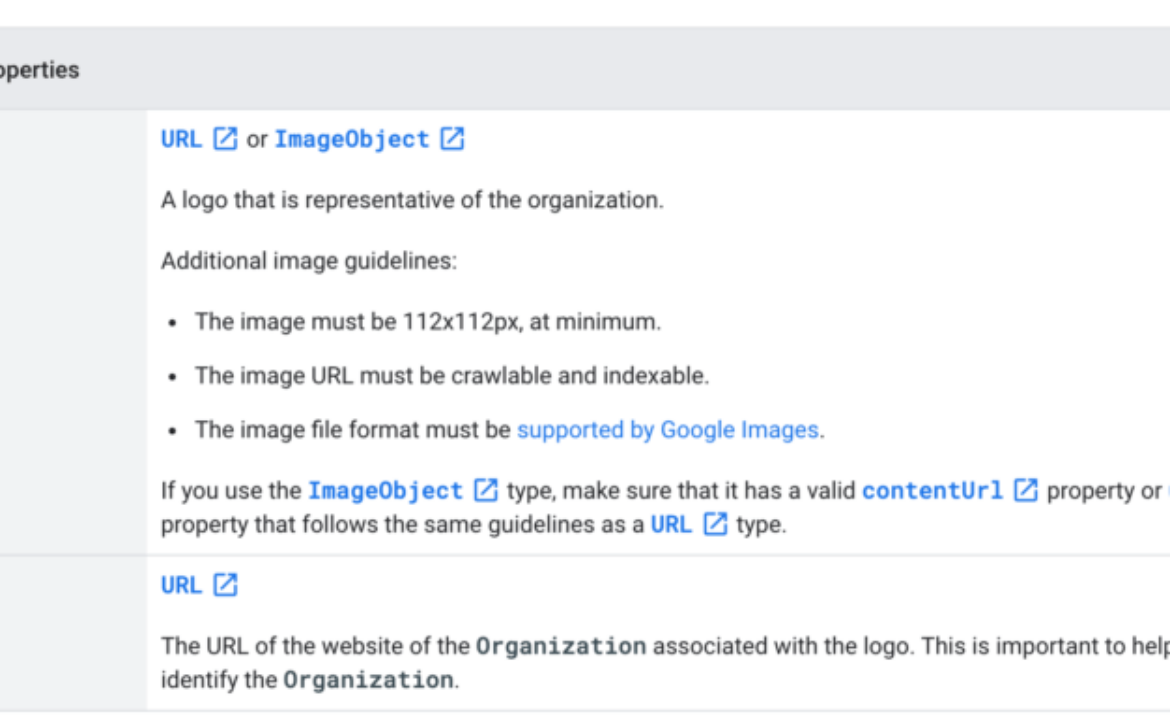

Because we are a smaller, niche publisher, our plan is to conserve our resources and turn off AMP for the entire site at once. Our core content management system is WordPress, and AMP is currently set for posts only, not pages. But that includes the bulk of our content by far.

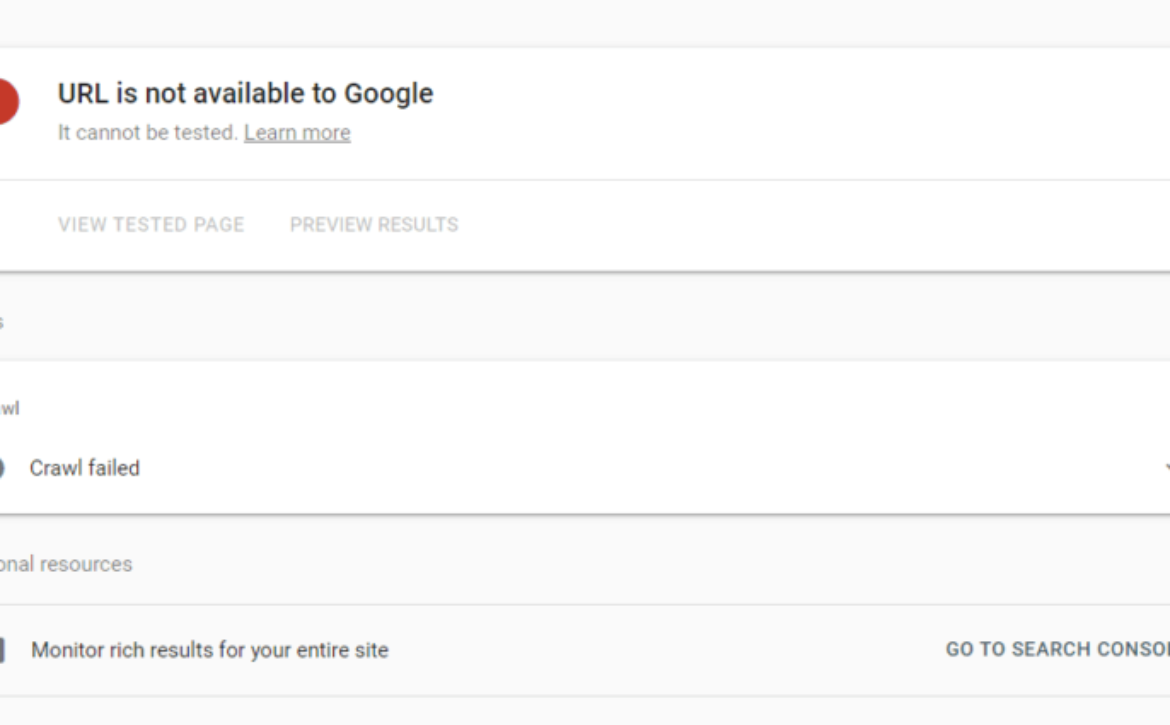

Our plan is to use 302 redirects initially. This way we’re telling Google the redirects are temporary, and there won’t be any PageRank issues if we turn them off (or replace them with 301s). We’ll then see how our pages perform without AMP. If there’s no measurable difference, we’ll replace those 302 redirects with permanent 301 redirects. The 301s should send any PageRank gained from the AMP URLs to their non-AMP counterparts.

Of course, if the worst-case scenario happens and traffic drops beyond what we can stomach, we’ll turn off the 302 redirects and plan a different course for AMP.
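For readers who want to picture the redirect plan, here is a minimal sketch in Python. It is not our production setup, which runs on WordPress. It assumes AMP URLs are marked either by a trailing `/amp/` path segment or by an `amp` query parameter, and the function name `amp_redirect` and its `permanent` flag are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TEMPORARY = 302  # testing phase: easy to switch off again
PERMANENT = 301  # final phase: consolidate signals on the non-AMP URL


def amp_redirect(url, permanent=False):
    """Return (status, location) for an AMP URL, or None for a non-AMP URL.

    Assumed AMP URL patterns (illustrative only):
      - a trailing "/amp" or "/amp/" path segment
      - an "amp" query parameter, e.g. "?amp=1"
    """
    parts = urlsplit(url)
    path = parts.path
    query = parse_qsl(parts.query, keep_blank_values=True)
    is_amp = False

    # Pattern 1: strip a trailing "/amp" segment from the path.
    stripped = path.rstrip("/")
    if stripped.endswith("/amp"):
        path = stripped[: -len("/amp")] + "/"
        is_amp = True

    # Pattern 2: drop the "amp" query parameter, keep everything else.
    kept = [(key, value) for key, value in query if key != "amp"]
    if len(kept) != len(query):
        query = kept
        is_amp = True

    if not is_amp:
        return None

    location = urlunsplit(
        (parts.scheme, parts.netloc, path, urlencode(query), parts.fragment)
    )
    return (PERMANENT if permanent else TEMPORARY, location)


if __name__ == "__main__":
    print(amp_redirect("https://example.com/some-post/amp/"))
    print(amp_redirect("https://example.com/some-post/?amp=1", permanent=True))
```

Keeping the status code behind a single flag mirrors the plan: start with `permanent=False`, watch traffic, and flip to `permanent=True` only once the non-AMP pages hold up. Rolling back means removing the redirect rule altogether.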

It’s a risk for sure. Though we have done a considerable amount of work to improve our Core Web Vitals (CWV) scores, we still struggle to put up high scores by Google’s standards. That work will continue, though. Perhaps the best solace we have at this point is that many SEOs we’ve spoken to are having trouble seeing measurable impacts from their work on CWV since the Page Experience Update rolled out.

Maybe it’s not about traffic for us

The relationship between publishers and platforms is dysfunctional at best. The newsstands of old are today’s “news feeds,” and publishers have been blindsided again and again when platforms change the rules. We probably knew that allowing a search platform to host our content on its own servers was doomed to implode, but audience is our lifeblood, so can you blame us for buying in?

We also know that tying our fates to third-party platforms can be as risky as not participating in them at all. But when it comes to supporting AMP on Search Engine Land, we’re going to pass. We just want our content back.

The post We’re turning off AMP pages at Search Engine Land appeared first on Search Engine Land.

John