SERP trends of the rich and featured: Top tactics for content resilience in a dynamic search landscape

These days, there is a lot going on at the top of the SERP. New features, different configurations, variations for devices and challenges for specific verticals pop up every day. Seasoned SEOs will tell you that this is ‘not new’, but the pace of change can sometimes pose a challenge for clients and webmasters alike.

So how do you get ahead of the curve? How can you better prepare your site for possible new SERP enhancements and make better use of what’s available now? In my session at SMX Advanced, I outlined four potential strategies for SERP resilience:

1. Prepare to share (more)

From Featured Snippets and Google for Jobs to Recipe Cards and Knowledge Graphs, Google is unceasing in its efforts to create more dynamic, user-pleasing SERPs. This is great for users because Google can serve lightning-fast results that are full of eye-catching information and easy to navigate, even on the smallest mobile screen. And with the range of search services available for a query (Google Lens, Google Maps and Google Shopping, to name but a few), the granularity of Google’s ability to provide information at the most crucial point of need is immense.

For SEOs, this means that it’s becoming increasingly rare to rank exclusively in the top spot of a given query. Even without ads, and even with the Featured Snippet, the top results can often include a mix of links, videos, and/or images from different domains.

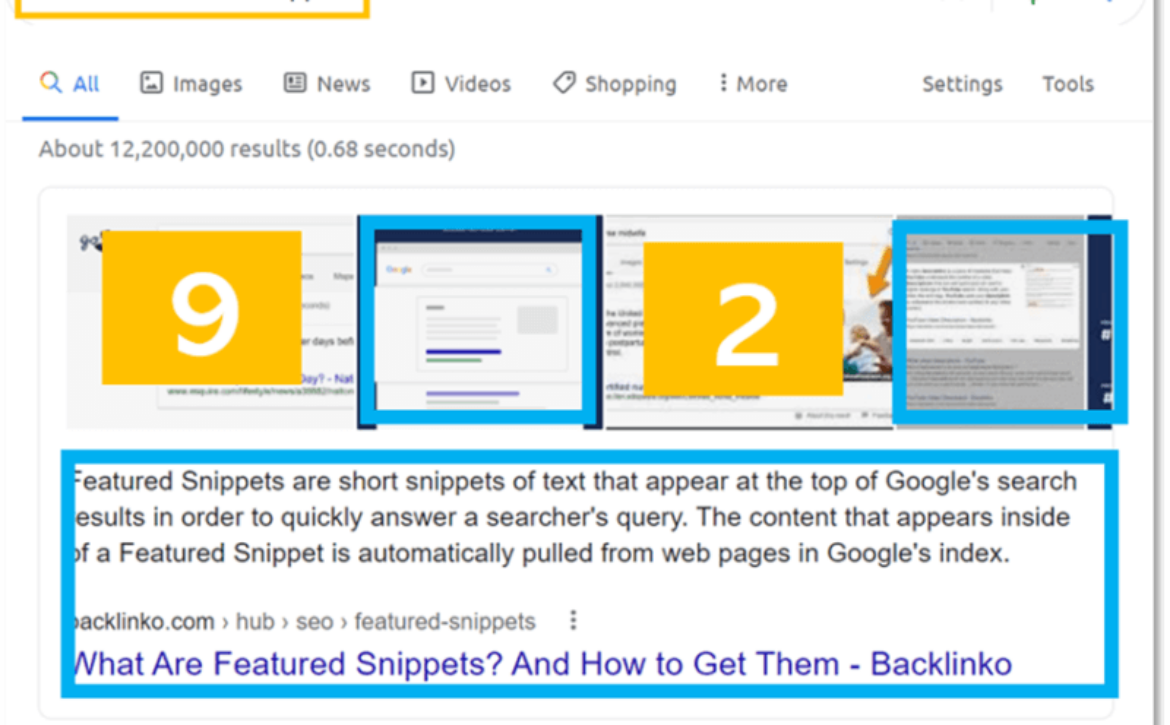

Have a look at this Featured Snippet for the query “What is a Featured Snippet”.

Here, the main paragraph and blue link come from Backlinko’s site, but there are four linked images in the carousel before you get to the text, and only two of them are from Backlinko’s page. The others are from pages ranking 9th and 2nd on the main SERP. So, while some tools would report the paragraph snippet as ranking “first”, from a user’s perspective the text result is the fifth clickable link.

And while this scenario is not new, it does illustrate something that we are seeing more regularly and in more complex configurations.

For instance, for the query “50 books to read before you die”, Google is serving a host of results ‘From sources across the web’ in an accordion. Within each drop-down is a carousel of results that includes web pages and bookmarked YouTube videos.

That means that plain blue links aren’t visible until after a row of ads and then 20+ links from the accordion carousels.

This presents both challenges and opportunities for SEOs.

Strategic challenges from mixed SERPs

For those who are looking to protect traffic, the challenge is to ensure that you are offering users ways to connect with your content in multiple media formats. Relying on a single content type (a text-only blog post, for instance) could leave your traffic vulnerable to changes in the SERP. So, a strategic approach to your most important SERPs should include a mix of written, video and/or image content. This will help ensure that you are optimized for how users are searching, as well as what they are searching for.

Strategic opportunities from mixed SERPs

For sites looking to gain traffic from established rivals, top SERPs that include links from multiple sites present an opportunity to gain precious ground by optimizing for search services that your rivals are ignoring. So, as well as looking for keyword gaps, make sure your content plan looks for gaps in media formats.

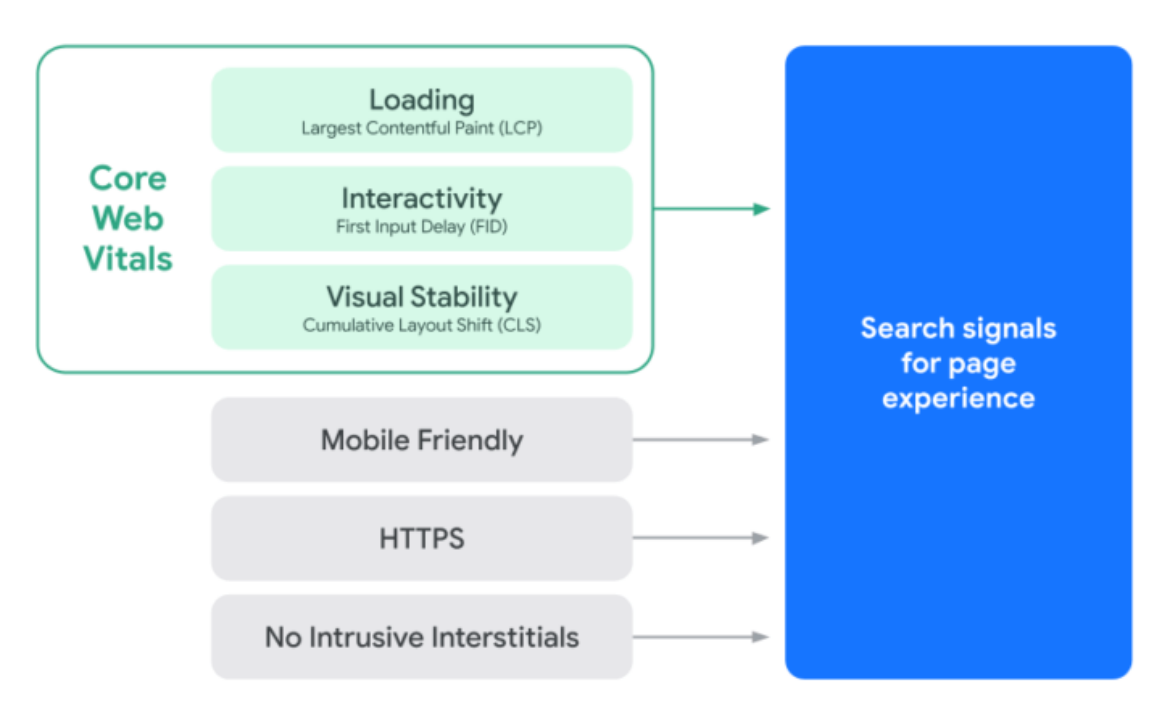

Use a good technical SEO framework

In both cases, the multi-media content you develop should be underpinned by sound technical infrastructure, like a good CDN, image sitemaps for unique images, structured data, and well-formatted on-page SEO.
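As one illustration of the “image sitemaps for unique images” point, here is a minimal Python sketch that builds an image sitemap with only the standard library. It assumes you already have a mapping of each page URL to the unique image URLs on that page; the `build_image_sitemap` name and the example.com URLs are hypothetical, while the element names follow the sitemaps.org namespace and Google’s image sitemap extension. Treat it as a starting point rather than a full generator: it does not split output at the 50,000-URL-per-file limit or write a sitemap index.

```python
import xml.etree.ElementTree as ET

# Namespaces from the sitemaps.org protocol and Google's image sitemap extension.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Serialize as <urlset xmlns="..." xmlns:image="..."> instead of ns0/ns1 prefixes.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_image_sitemap(pages: dict[str, list[str]]) -> str:
    """Return an image sitemap as an XML string.

    `pages` maps each page URL to the unique image URLs that appear on it
    (a hypothetical input you might assemble from your CMS or a site crawl).
    """
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            # One <image:image> entry per unique image on the page.
            image_el = ET.SubElement(url_el, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image_el, f"{{{IMAGE_NS}}}loc").text = image_url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

For example, `build_image_sitemap({"https://example.com/guide": ["https://example.com/a.png"]})` returns a document with one `<url>` holding one `<image:image>` entry, which you could save as a sitemap file and submit alongside your regular sitemap.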

2. Invest in knowledge hubs

In November 2020 and January 2021, Google tested Featured Snippet contextual links, which added reference links to other websites from within Featured Snippets. Then, in May 2021, a Google “bug” showed Featured Snippets that included links to further Google searches.

While Google has yet to outline any specific plans to roll this out as standard, they have been known to test new SERP features on live results in the past. For instance, they were testing image carousels with Featured Snippets in 2018 before the wider rollout in 2020.

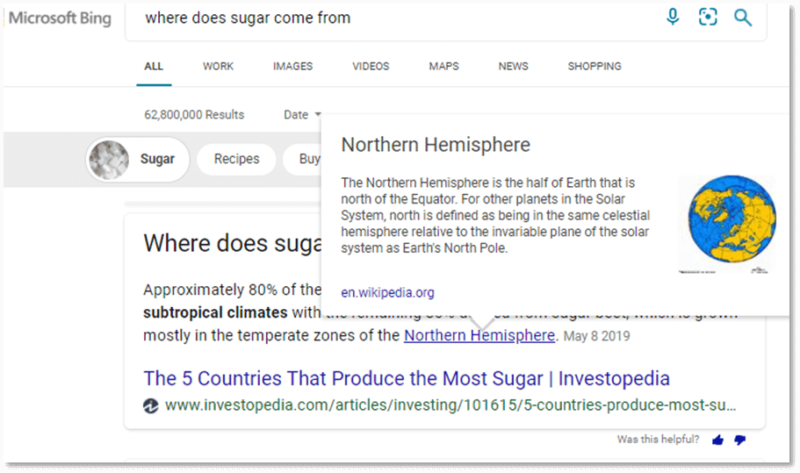

Not only that, but rivals at Bing are already using these techniques extensively. Their SERPs are bursting with contextual links pulling images, copy, and clickable links into the SERPs from Wikipedia.

This suggests to me that contextual links are likely to become a Google thing in the near future.

How might you be able to optimize for this possible feature development? In my humble opinion, it is worth spending some time investing in knowledge hub-style content. Hubs can enable you to become a reference for users on your own site and across the wider web. While much of the traffic from potential contextual links would likely go to reference sites like Wikipedia, not every niche term or topic has a wiki page. So, if you start building now, you could be adding value for current users and for the future needs of Google’s bots.

A knowledge hub can be technically simple, or complex, but should be underpinned with good on-page SEO and unique content that is written in natural language.

3. Stay ready so you don’t have to get ready (with structured data)

At the top of the SERP, plain blue links are becoming increasingly rare, and today your search results are likely to include a mix of links and information from Google-managed channels like:

- Google for Jobs

- Video snippets, predominantly from Google-owned YouTube

- Structured data-enabled Rich Results, such as recipe cards, and/or Google Ads

These features are generated using Google APIs, YouTube and services like Google Ads, and also largely through structured data specifications. This serves Google well because it can deliver information with a more consistent user experience, which is particularly crucial within the constraints of a mobile-first web.

I bring this up in a discussion about SERP resilience because, as these new and shiny features are added, they take the place of plain blue links and, historically, they have been seen to replace Featured Snippets.

For instance, we saw a significant drop in Featured Snippets in 2017 as Google-managed Knowledge Panels increased.

During this time, one of the most prominent Featured Snippet category types was for recipes. But Google soon found a more user-friendly way to display this content via mobile-friendly Rich Results.

Now, you might say, “Well, 2017 was a long time ago.” But we saw similar activity this February, when Moz reported that as the number of Featured Snippets temporarily dropped to historic lows, rich results for video rose at the same time.

And though many of the Featured Snippets returned, this phenomenon of SERP features being neither created nor destroyed, but simply changing form, is a regular occurrence. Even this summer, the prevalence of People Also Ask has been steadily declining as videos increase.

This means that Google SERP developments can cause traffic disruption for pages that are optimized for a single type of search result.

The TL;DR: don’t put all your eggs in one basket.

If you have a page that performs well as a top-ranking link, Featured Snippet or other feature, don’t expect that to last forever, because the SERP itself could completely change.

- Protect your traffic by optimizing your pages for relevant APIs and strategic structured data for your niche, alongside your on-page optimizations.

- Gain traffic by identifying competitors who are not using structured data and target your efforts accordingly.

- Monitor your niche for changes to Rich Results and Google features, and plan accordingly. This will involve many of your regular tools, but also manually reviewing the SERP to understand new and emerging elements.
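To make the structured data bullets more concrete, the following Python sketch does two things: it emits schema.org `Recipe` markup as a JSON-LD `<script>` tag (the “protect” side), and it lists the JSON-LD `@type` values declared in a page’s HTML so you can spot competitor pages without markup (the “gain” side). The function and class names are hypothetical, the recipe fields shown are only a small subset of what Google’s Recipe documentation describes, and the parser reads top-level JSON-LD objects only, so pages that nest items inside `@graph` would need an extra step. Any real markup should still be checked with Google’s Rich Results Test.

```python
import json
from html.parser import HTMLParser


def recipe_jsonld(name: str, image_url: str, ingredients: list[str], steps: list[str]) -> str:
    """Render a minimal schema.org Recipe as a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": [image_url],
        "recipeIngredient": ingredients,
        "recipeInstructions": [{"@type": "HowToStep", "text": step} for step in steps],
    }
    # Escape "<" so text such as "</script>" inside a field cannot end the tag early.
    payload = json.dumps(data).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'


class JsonLdTypeFinder(HTMLParser):
    """Collect top-level @type values from JSON-LD <script> blocks in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.types: list[str] = []
        self._in_jsonld = False
        self._chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._chunks = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._chunks.append(data)  # script text may arrive in several chunks

    def handle_endtag(self, tag):
        if tag != "script" or not self._in_jsonld:
            return
        self._in_jsonld = False
        try:
            parsed = json.loads("".join(self._chunks))
        except json.JSONDecodeError:
            return  # skip malformed markup instead of aborting the audit
        for item in parsed if isinstance(parsed, list) else [parsed]:
            if isinstance(item, dict) and "@type" in item:
                found = item["@type"]
                self.types.extend(found if isinstance(found, list) else [found])


def structured_data_types(html: str) -> list[str]:
    """Return the JSON-LD @type values declared in an HTML document."""
    finder = JsonLdTypeFinder()
    finder.feed(html)
    finder.close()
    return finder.types
```

Run `structured_data_types` over the HTML of the pages ranking for your priority queries: an empty list suggests a page with no JSON-LD at all, which is one possible gap worth targeting (markup delivered as microdata or RDFa would not show up in this sketch).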

4. Dig into core topics for passage ranking

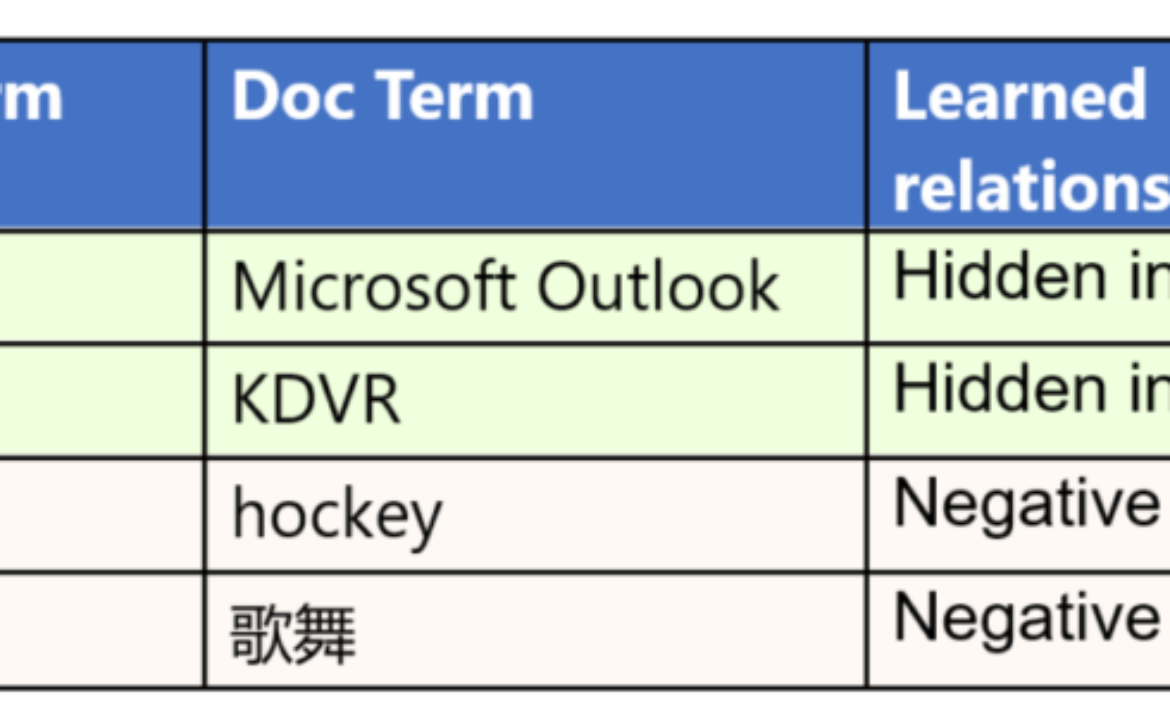

Google’s commitment to natural language processing within its algorithms gained pace in the last 12 months, when Google introduced Passage Ranking at Search On 2020 in the autumn and MUM at Google I/O in spring 2021.

Passage Ranking is often confused with jump-to-text links, but Google has explained that it is intended to help Google understand content more intelligently: specifically, to find ‘needle in a haystack’ passages that answer queries more accurately, even if the page as a whole is not particularly well formatted.

The analogy I often use is this: if we imagine that the SERP were a playlist of songs, then previously the whole song would have had to be strong to make it onto the list. Passage Ranking is essentially saying that if the rest of the song is so-so, but the guitar solo is really, really good, then it’s still worth adding that song to the playlist.

This update went live on 10 February 2021. Google said that it would affect 7% of searches, and SEOs had a lot of questions:

- Will Passage Ranking affect what the SERPs look like?

- Will Passage Ranking affect what Featured Snippets look like?

- Will Passage Ranking affect only Featured Snippets?

Speaking with Barry Schwartz, Google’s Search Liaison Danny Sullivan said the answer was No, No, and No.

So why am I bringing this up in a discussion about SERPs?

Well, since Passage Ranking is now a contributing factor for ranking, and Featured Snippets are elevated from the top-ranking SERP results, in my opinion, we are likely to see more variation in the kinds of pages that achieve Featured Snippet status. So alongside pages that follow all of the content formatting best practices to the letter, we are likely to see more pages that are offering query satisfying information in a less polished way.

“The goal of this entire endeavor is to help pages that have great information kind of accidentally buried in bad content structure to potentially get seen by users that are looking for this piece of information” – Martin Splitt

Confronted with these results, SEOs who love an “if X, then Y” approach may be perplexed, but my research has led me to believe that one of the contributing factors is user intent.

Ranking shifts directly following the Passage Ranking update suggest that the content that was boosted sought to answer both the what and the why behind user queries. Case in point: a website that was traditionally optimized for the query “different colors of ladybirds” owned the Featured Snippet in January.

This page is optimized using many of the established SEO techniques:

- Literally optimized for the search query

- Includes significant formatting optimizations

- Covers the keyword topic directly to answer “What are the different colors of ladybirds?”

- Core copy is around 500 words

But after the Passage Ranking update, the same query returned a page that was less literally optimized but provided better contextualization. This usurper showed a better understanding of why ladybirds were different colors and jumped from 5th to 1st position during February.

Reviewing the page itself, we see that, in contrast to the earlier snippet, on this page:

- Core copy is over 1,000 words

- Formatting is limited

- The topic is covered more generally, with an intent-based focus on answering “why”

Other examples and other big movers during this time showed a similar correlation with intent-focused search results.

In each case, it seems that Google is attempting to think ahead about user intent, answering queries with less literal results that better satisfy the thought process behind them. Its machine learning tools now allow Google to better understand topics as well as keywords.

So, what does this mean for SEOs?

Passage ranking looks like good news for long-form content

Well, where you have a genuine, unique perspective on a topic, Passage Ranking could be an incentive to create more thorough and in-depth content centered around users’ needs rather than search volume alone.

- Protect your traffic by optimizing your content for longtail keywords and intent.

- Gain snippet traffic by creating intent-focused content. Answer the “so what?” and don’t be afraid of detail.

- Consider topics as well as keywords in content, navigation and customer journey.

From a technical SEO perspective, top tactics include a solid internal link architecture and long-form content templates with tables of contents.
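Tables of contents in long-form templates work best when every section has a stable, readable anchor that internal links can point to. The Python sketch below is one hypothetical way a template could do this: it takes the page’s headings as `(level, text)` pairs, assigns each a unique slug-style anchor, and renders a flat TOC. The `slugify`/`build_toc` names, the `toc-level-N` CSS classes and the slug rules are all assumptions for illustration, not a standard that Google requires.

```python
import html
import re


def slugify(text: str) -> str:
    """Turn heading text into a URL fragment: lowercase, no punctuation, hyphens."""
    slug = re.sub(r"[^\w\s-]", "", text.lower())
    return re.sub(r"[\s_-]+", "-", slug).strip("-")


def build_toc(headings: list[tuple[int, str]]) -> tuple[list[tuple[int, str, str]], str]:
    """Give each (level, text) heading a unique anchor and render a flat TOC.

    Returns the headings with their anchors (so the template can set matching
    id attributes on the <h2>/<h3> tags) and the TOC markup itself.
    """
    used: set[str] = set()
    anchored: list[tuple[int, str, str]] = []
    for level, text in headings:
        base = slugify(text) or "section"
        anchor, n = base, 2
        while anchor in used:  # de-duplicate repeated headings: faq, faq-2, ...
            anchor = f"{base}-{n}"
            n += 1
        used.add(anchor)
        anchored.append((level, text, anchor))

    # Flat list; the level-specific class lets CSS indent h3 entries under h2s.
    items = "".join(
        f'<li class="toc-level-{level}"><a href="#{anchor}">{html.escape(text)}</a></li>'
        for level, text, anchor in anchored
    )
    return anchored, f'<nav class="toc"><ul>{items}</ul></nav>'
```

A flat list keeps the sketch short; a template that needs true nesting could group the returned `anchored` tuples by level before rendering. Because the anchors are derived from heading text, renaming a heading changes its anchor, so internal links to specific sections should be updated at the same time.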

How can you build SEO resilience for a dynamic SERP?

The same way you dress for a pumpkin spiced autumn day, with layers.

In this post, I’ve discussed tactics for:

- Optimizing content for mixed media Featured Snippet panel results

- Creating knowledge hubs for potential contextual linking developments

- Building structured data into your website before rich results arise

- Using intent-focused, long-form content to potentially benefit from Passage Ranking

There is no single tactic that works in isolation. The SERP is so dynamic at the moment that aiming for, or banking on, a single part of the SERP is likely to leave you vulnerable to traffic disruption if and when things evolve. Think about how you can use these tactics to build on and level up your existing SEO foundations. Change is the only constant; plan accordingly.

The post SERP trends of the rich and featured: Top tactics for content resilience in a dynamic search landscape appeared first on Search Engine Land.